This is NotThe Blog you are looking for…

Welcome to NotThe.Blog! NT.B (or, inconsistently, NTB) is a personal blog covering virtualization (mostly VMware). This is “NTB v2.0” and marks the move away from the original reverse chronological linear blog format to a Digital Garden approach of posts, articles and notes with a greater emphasis on linked content. You can learn more about this approach through the “About” page.

Depending on your device screen size you’ll find the latest posts and the site navigation on the left (or at the bottom) of this page. Listed below are (hopefully) interesting snippets, links to posts and other updates. If this kind of thing is what you came here for, welcome, enjoy, and tell your friends!

If not, you can safely move along, as this is not the blog you are looking for…

VCF 9.0 Subnet Types

🗓️ 2025-10-14

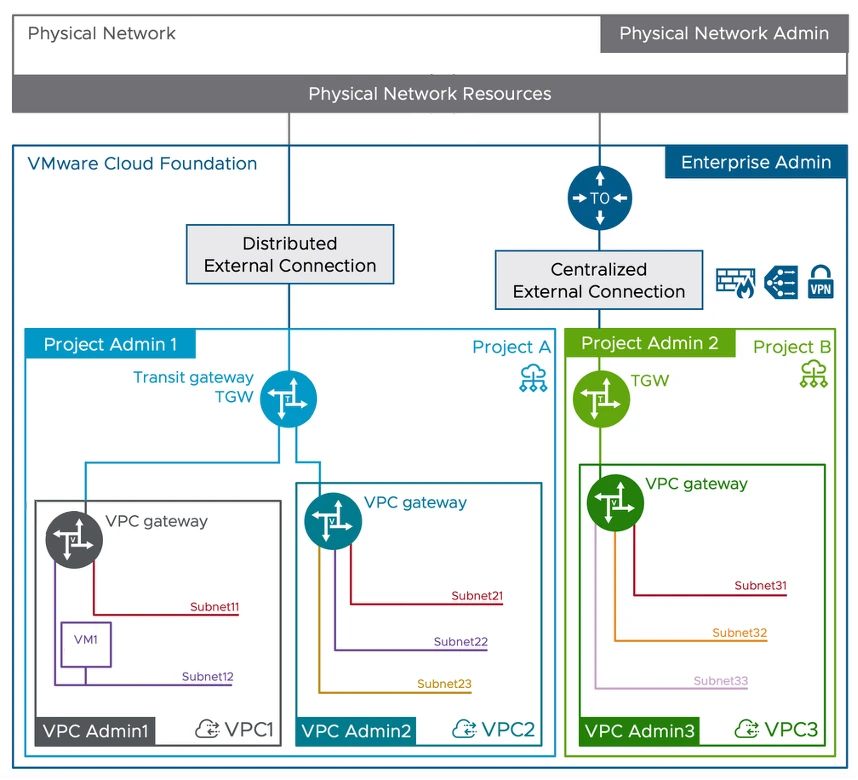

Within VCF Networking in v9.0 there are several types of networks which can be created by the VPC Admin when granted permission and resources by the Project Admin. That is, as long as there are sufficient resources allocated to the Project by the Enterprise Admin. Wait? What? Who the heck are all these people and where did they come from?

In this post we'll take a look at each "persona", figure out if we have enough desks for them, and then see how those network types differ. Finally, and arguably most usefully, we'll explore if, and how, each network type can connect to other parts of the SDDC and to the outside world. Along the way, we'll see how their IP addresses are managed and probably find a NAT or two hiding in the shadows...

Weekly VMware KB Article update list

🗓️ 2025-07-28

One of our colleagues posted a (public) link into an internal chat which produced a list of all the VMware Knowledge Base articles which had been updated/published to the public Broadcom Support site within the last week.

While anyone can do this on the page itself, the number of categories to select and faff around with makes this a little tedious. So, as I thought this might be useful, here's an easier to type/remember *go-link* to the page / query to save you the trouble.